The 5 Most Important Things to consider when Automating Machine Builds to be Consumed from a Catalog

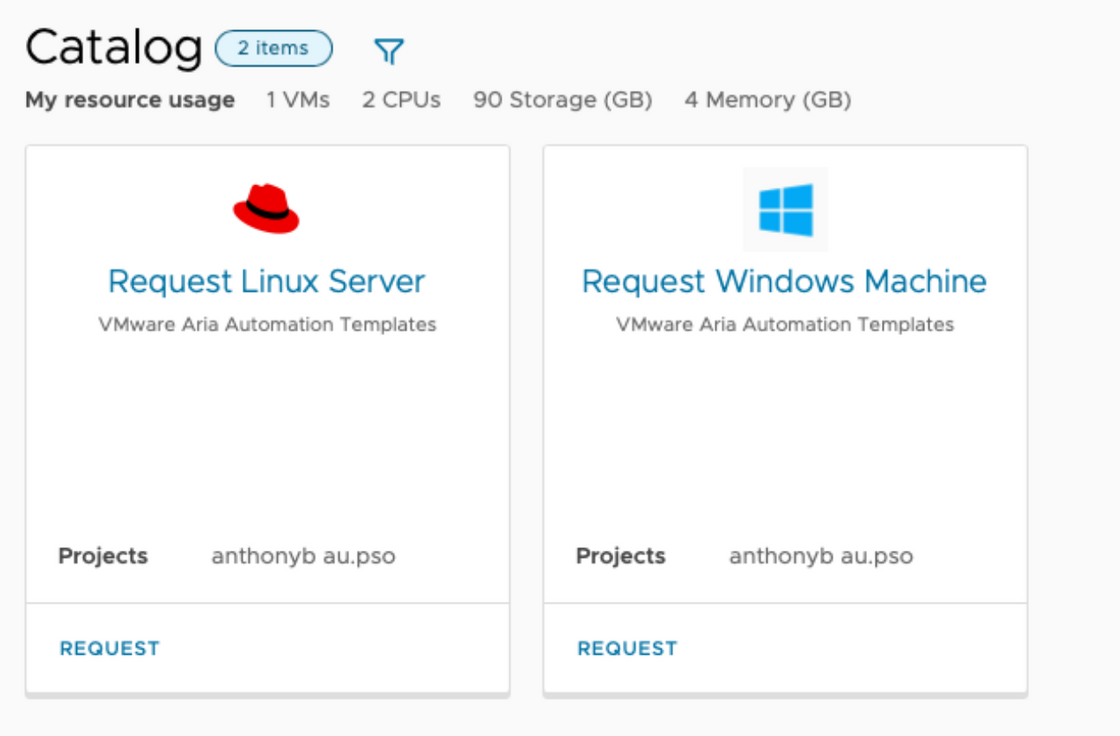

In this article I am going to discuss what I think are the 5 most important things you need to consider when Automating machine / server / VM builds from a catalog.

I always consider this first from the point of view of the End User / Customer who will be using the Catalog, and then from that of those who have to manage and troubleshoot the machines that result from the build. If the infrastructure team is automating the build, it is tempting to put our own needs first and the Customer second. Don't fall into that trap. The Customer is always king.

1. SPEED OF YOUR DEPLOYMENT

I feel the need, the need for speed.

The most important thing driving me when automating is SPEED. I think it is also the most underestimated factor of a server build, especially by infrastructure teams. This is often because once the server build is working and succeeding, the priority becomes making sure it has everything in it and saying Job Done. I would argue that from the outset until the build is really finished, the speed of deployment should be a defining factor.

If you want automated builds to be consumed from a Catalog, the faster the deployment the better, especially when the end user is a developer. I target sub-15-minute builds as a starting point, preferably 10 minutes, and then start factoring in installing software. If your build is taking 30+ minutes, I would make working out how to speed it up a priority. If it's between 15 and 30 minutes, make sure every future iteration improves on this and the time doesn't continue to increase.

For a minute, imagine you are a developer requesting a machine from the catalog. From this point of view you might think: I have multiple ways to work. I can write code on my laptop, or I can stand up VMs on my laptop in Vagrant, VMware Workstation or something similar and write code there. I am used to a cloud-like service, and chances are I have built and worked on code in AWS, Azure, DigitalOcean or similar. I expect machines in less than 15 minutes, and if I don't get them at least that fast I just won't use your service. I'll keep doing what I'm doing or go somewhere else where I get what I want.

You want your developers using server builds from your catalog, don't you? You are doing this for a reason, right? It doesn't matter how great your catalog build is: if users aren't using it, all those benefits you are counting on for cost saving won't be realised. If you get the developer to use your build, you can do all those things management think you are doing, like providing an agile cloud-like service while controlling where machines are deployed, controlling the security of the machines, and making sure that the machine being built is very similar to the machine that will exist in production, where the code will ultimately run.

Be realistic when calculating your server build time, and think how your customer would think. If your server build says it's complete in 12 minutes in the Catalog/Automation tool, that is not the whole story. If you can't start your server build process in the catalog because of some prerequisite like acquiring an IP address, and that takes a week because you need to send an email to the IP networking spreadsheet god, then that process needs to be added to your server build time (i.e. build time = a week and 12 minutes). The same goes if additional time is needed for other prereqs, patching, software install, customization, group policy, configuration management, or anything else.
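To make that arithmetic concrete, here is a minimal Python sketch of how I would add it up. The function name and its parameters are my own invention for illustration, not something any Catalog tool provides.

```python
from datetime import timedelta

def effective_build_time(catalog_time, prerequisites=(), post_build=()):
    """Return the build time as the customer experiences it.

    catalog_time  -- what the Catalog/Automation tool reports
    prerequisites -- waits before the request can even be submitted
    post_build    -- patching, software installs, manual steps afterwards
    """
    total = catalog_time
    for step in (*prerequisites, *post_build):
        total += step
    return total

# The IP address example: a one-week wait in front of a 12-minute build.
reported = timedelta(minutes=12)
real = effective_build_time(reported, prerequisites=[timedelta(weeks=1)])
print(real)  # 7 days, 0:12:00
```

The number worth tracking is `real`, not `reported`, because that is the one your customer feels.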

Yes, baking everything into the template will speed things up, and for common things that sometimes makes sense, but it may also lead to Template sprawl, adding technical debt. This is a trade-off. Even though speed is incredibly important, acceptable exceptions can be made to minimise sprawl but maximise speed. However, if the only answer you have for speeding up builds is to build everything into a new template, then maybe some more diverse thinking is needed.

In short, Speed should always be front of mind during the design and implementation of an automated build. The overall speed of deployment is very important, to the point where you should and need to be ruthless at times, but speed shouldn't come at any cost.

2. SPEED OF FAILURE / CONSISTENTLY SUCCESSFUL BUILDS

Yes, the top two are both based on Speed. If you aren't deploying fast enough, no one will use your service unless they are forced to, and that's not why you want people using your Catalog.

Speed of Failure is about making sure any failure that may occur in the build process happens as soon as possible, so the customer can correct the issue and request it again. If your builds are 15 minutes but they often fail around the 14 minute mark, then you have a 29 minute build time in my book.

The best time to fail is before deployment or not at all (hence consistently successful builds). The easiest way to do that is field validation on the request page. If the end user can't type in an input or combination of inputs that isn't allowed, or gets an on-page error if they do, this is the best possible outcome, as you have avoided the failure altogether. I regularly see tools being used that don't allow form validation, and tools that do allow it but are not set up to do it, and I see wasted opportunity. Make sure you have a tool that allows it, and use it.
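As a rough sketch of the kind of rules I mean, here is some Python that checks single fields and one combination of fields. The field names, limits and the backup rule are all assumptions made up for this example; your Catalog tool will have its own way of expressing them.

```python
import re

# Hypothetical limits for illustration; real values come from your own standards.
ALLOWED_SIZES = {"small", "medium", "large"}
HOSTNAME_PATTERN = re.compile(r"[a-z][a-z0-9-]{2,14}")

def validate_request(form):
    """Return a list of error messages; an empty list means the form is valid."""
    errors = []
    if not HOSTNAME_PATTERN.fullmatch(form.get("hostname", "")):
        errors.append("hostname: 3-15 characters, lowercase letters, "
                      "digits or hyphens, starting with a letter")
    if form.get("size") not in ALLOWED_SIZES:
        errors.append("size: must be one of " + ", ".join(sorted(ALLOWED_SIZES)))
    # Combination rule: a production machine must have a backup policy selected.
    if form.get("environment") == "prod" and not form.get("backup_policy"):
        errors.append("backup_policy: required when environment is prod")
    return errors

print(validate_request({"hostname": "Web_01", "size": "huge", "environment": "prod"}))
```

Every message returned here is a failed build the customer never had to wait for.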

The next best thing applies when you know something might fail late in your build and you can't validate it on the form (are you sure you can't do it on the form?), but you can validate it using some other process. In that case, run the validation step as soon as the build starts, so that it can fail in the first 2 minutes.

This may seem like a small thing to you, but trust me, it won't to your users.
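One way to picture this is a build runner that refuses to start any build step until every early check has passed. This is only a sketch in Python with names I have made up (`run_build`, the check and step labels); an orchestration tool would model the same idea as the first task in its workflow.

```python
def run_build(preflight_checks, build_steps):
    """Run every preflight check before any build step so failures surface early.

    Both arguments are lists of (name, callable) pairs. A check returns an
    error string, or None when it passes.
    """
    failures = []
    for name, check in preflight_checks:
        error = check()
        if error is not None:
            failures.append(f"{name}: {error}")
    if failures:
        # Nothing has been deployed yet, so the customer can fix and resubmit.
        return {"status": "failed", "stage": "preflight", "errors": failures}

    for name, step in build_steps:
        step()
    return {"status": "complete", "stage": "build", "errors": []}

# Hypothetical checks: the IP pool is exhausted, the DNS name is free.
executed = []
result = run_build(
    [("ip pool", lambda: "no free addresses in pool"), ("dns", lambda: None)],
    [("clone template", lambda: executed.append("clone"))],
)
print(result["stage"], result["errors"])
```

Because the checks run first, the failure is reported before the template is ever cloned.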

3. FINISHED STATUS MEANING SUCCESSFULLY COMPLETE

There is nothing I hate more (other than a lack of speed) than my catalog saying my server built successfully, and then I log in and the build has not completed at all. My software is not installed and my server is going through an initial 2 hour patch cycle. That, or there are 5 additional manual steps I need to run after every one of my server builds to make it workable.

If your server build takes 15 minutes to say complete, but the software doesn't finish installing until 20 minutes later, then you have a 35 minute build time. I have seen processes that trigger software installs add hours to the real build time of a server. If the Catalog tool says my SQL server is built, I expect to be able to log in immediately and have SQL ready to go.

What is important here is:

- The tool you are using for your Catalog, and how you are using it. (Think Aria Automation, ServiceNow etc.)

- The tool you are using to deploy the configuration, and how you are using it. (Think Aria Automation Config (SaltStack), Puppet, Chef, Group Policy, Satellite, CloudConfig)

- Whatever you are using to install the software, and how you are using those tools. (Think the above configuration tool or a script in combination with a binary repo; something like Microsoft ECM; or building it into the image/template, which is a whole blog article in itself, see the discussion at the bottom of Point 1.)

- The feedback loop between the Catalog tool and the other tools.

Mistakes I see made are usually either picking a tool that has a bad feedback loop; picking a tool that has a long discovery cycle between a machine wanting the software installed and the time it is actually installed; or not spending enough time ensuring that the feedback loop is an important part of the automation process.
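A simple way to think about the feedback loop is that the Catalog item should only report complete once the other tools agree the machine is ready. Below is a minimal Python sketch of that idea. The function and the check names are hypothetical; in a real setup each check would ask your configuration or software install tool for its state.

```python
import time

def wait_until_ready(checks, timeout_seconds=900, interval_seconds=15,
                     sleep=time.sleep, clock=time.monotonic):
    """Poll readiness checks; return True only when all of them pass.

    `checks` maps a name to a zero-argument callable returning True or False.
    Returns False if the timeout is reached with checks still pending.
    """
    deadline = clock() + timeout_seconds
    while True:
        pending = [name for name, check in checks.items() if not check()]
        if not pending:
            return True
        if clock() >= deadline:
            return False
        sleep(interval_seconds)

# Stand-in for "is SQL installed and accepting logins?"; passes on the third poll.
calls = {"n": 0}
def sql_ready():
    calls["n"] += 1
    return calls["n"] >= 3

status = "complete" if wait_until_ready({"sql": sql_ready}, sleep=lambda s: None) else "failed"
print(status)
```

The time spent polling counts towards the build time, which is the point: the Catalog then tells the truth about when the machine is usable.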

4. CONSISTENT REPEATABLE BUILDS

It is in everyone's best interest to get this right. Whether you are the customer, the automator, the OS team, the infrastructure team or the helpdesk, it is always easier to use and troubleshoot a server if the server builds are almost identical every time.

This is one of the biggest advantages of using automated server builds. The only reason it comes in at number 4 is that the top 3 are about making sure your Automated Build Catalog item is used in the first place. Once it is used, this is the major benefit.

How can we help achieve this:

- Minimal Template variation across Catalog items

- An automated, well known, well designed, documented and regularly run Template creation process, to ensure the gold templates are up to date and pristine.

- A well designed automated template distribution process to ensure consistency of templates no matter the geo or environment.

- Configuration that changes depending on factors like geo, system, environment being managed in the configuration or orchestration layer not in the template.

- A daily test build at the start of the day, so you know if there are issues before the first machine is built.

- A successful test build to be part of any new gold template becoming the bedrock of all of your automation builds (automating this using unit testing is possible)

- Post build Configuration is consistently applied in a consistent method, using consistent tools.
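To illustrate the point about keeping geo and environment differences out of the template, here is a small Python sketch of layered configuration. The keys, values and hostnames are invented for the example; in practice this data would live in your configuration or orchestration tool.

```python
# Hypothetical values; in practice these live in your configuration tool.
DEFAULTS = {"ntp_server": "ntp.example.internal", "log_level": "info"}
BY_GEO = {"emea": {"ntp_server": "ntp.emea.example.internal"}}
BY_ENVIRONMENT = {"dev": {"log_level": "debug"}}

def resolve_config(geo, environment):
    """Merge settings from least to most specific; later layers win."""
    config = dict(DEFAULTS)
    config.update(BY_GEO.get(geo, {}))
    config.update(BY_ENVIRONMENT.get(environment, {}))
    return config

print(resolve_config("emea", "dev"))
```

The same gold template can then serve every geo and environment, with only the resolved settings differing.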

Note: Template and configuration planning should be done considering whether you will be using traditional On-prem, a single cloud provider or MultiCloud (including an On-premise cloud), and what suits the providers you are using. I don't cover this in this article.

5. THE TEARDOWN PROCESS SHOULD BE AS THOROUGH AS THE BUILD PROCESS

This is the spot where, for me, the debate really starts over what the next most important thing is. There are a few things that could take this spot, but for right now this is my pick.

This probably has a lot to do with me spending the first half of my career in operations teams, often either being given or delegating the responsibility for things like cleaning up DNS, Load balancer VIPs, Domain joined Computer accounts, Service Accounts and a whole lot of other housekeeping no one really wants to do.

The above tasks should never have to be done by hand once you start building and tearing down builds using automation. It is also one of the biggest things I see that sets apart both a mature automation process and a mature Automator or DevOps practitioner. This is a big part of the Ops in DevOps.

It is also the biggest shortfall in the out of the box functionality of Config Tools that don't have an end to end Automation tool in front of them. Once the deployment is deployed, if the idea of a "Deployment" with metadata like machine IDs, IP addresses and other similar artifacts doesn't exist, then how do you tear it down later? I'm not saying you can't do this in Config tools, just that it is out of the box in Automation tools and needs to be thought about when using Config tools in isolation.

Whatever you add as part of an automated build needs to be torn down as part of an automated teardown of that build. While you are reading the list below, ask yourself: does my current automated server teardown process also automatically tear down these things?

The most common items that need tearing down applying to Server Builds:

- IP addresses (Public or Private) from IPAM and/or DNS

- The Computer account from LDAP or Active Directory

- Local admin or root passwords or similar local accounts from your Credential Repository. (they should be different on every server, with automation there should be no excuse not to do this)

- Load balancer constructs like updating Pool membership or removing VIPs, Pools and Monitors altogether.

- Backup schedules from your backup tool.

- Any licenses being consumed by the machine that need removing from associated licensing tools.

- Entries from Configuration Management and Patching tools

- Entries from Firewall rules specific to the machines in the deployment (hopefully this is tag based in NSX so it's easy or a non issue)

Note: if you have an archival period where someone can reinstate a machine before it's deleted, then the teardown process should not start or be triggered until AFTER the archival period is reached.
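One pattern that helps here is to record an undo action for every item at the moment the build creates it, and to hold the teardown back until the archival period has passed. The Python below is only a sketch under those assumptions. The `Deployment` class and its methods are my own illustration, not how any particular Automation tool models it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

@dataclass
class Deployment:
    """Record of what a build created, so teardown can undo every item."""
    name: str
    archive_period: timedelta = timedelta(days=0)
    teardown_actions: list = field(default_factory=list)

    def register(self, description, undo):
        """Call this at build time, straight after each resource is created."""
        self.teardown_actions.append((description, undo))

    def teardown(self, deleted_at, now):
        """Undo everything in reverse order, but only after the archival period."""
        if now < deleted_at + self.archive_period:
            return []
        done = []
        while self.teardown_actions:
            description, undo = self.teardown_actions.pop()
            undo()
            done.append(description)
        return done

# The lambdas stand in for real calls to DNS, Active Directory and so on.
log = []
deployment = Deployment("web01", archive_period=timedelta(days=7))
deployment.register("DNS record", lambda: log.append("dns"))
deployment.register("AD computer account", lambda: log.append("ad"))

deleted_at = datetime(2024, 1, 1)
too_early = deployment.teardown(deleted_at, deleted_at + timedelta(days=1))
after_archive = deployment.teardown(deleted_at, deleted_at + timedelta(days=8))
print(too_early, after_archive)
```

Registering the undo next to the create is what keeps the two processes equally thorough: a new build step without a teardown entry is easy to spot in review.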

Thanks for reading my article,

Liked it? Let me know, it's nice to know I helped you.

Disagree? Let me know, a range of opinions and perspectives is always refreshing and can lead to positive growth. I'd love to hear from you.

You can do either via comment or reach out to me on LinkedIn (links are on the About Me page).